➡️ Exact delivery dates may vary, so some features may not be immediately available on the publication date. For your specific access dates, please contact your LivePerson account team.

🚨The timing and scope of all changes remain at the sole discretion of LivePerson and are subject to change.

Copilot Translate EA - Bridge language gaps between agents and consumers

LivePerson is pleased to announce the Early Access (EA) release of Copilot Translate, an LLM-powered translation feature that translates inbound and outbound messages in real time. This feature is designed to bridge language gaps, allowing agents and consumers to communicate effortlessly in their preferred languages.

Key benefits

- For consumers: Instant understanding, faster issue resolution, reduced communication friction, and enhanced satisfaction by communicating in their native language.

- For brands: Accelerated market expansion, reduced operational costs, enhanced global reputation, improved customer retention, and competitive advantage.

- For agents: Optimized agent utilization, boosted productivity, reduced stress, higher job satisfaction, and streamlined training.

Language support

The only supported agent language is English.

While LLMs are multi-lingual and can understand many languages, the consumer languages that LivePerson has fully tested and validated include:

All other consumer languages are considered unsupported by the system. We encourage you to try consumer languages other than English. However, rigorous testing of your specific use case is required to ensure quality.

How preferred languages are determined

To ensure accurate and effective communication, Copilot Translate determines the preferred languages of both the agent and the consumer using the methods outlined below.

In our EA release, Copilot Translate assumes that the agent’s preferred language is English.

To determine the consumer's preferred language, the system uses an LLM to analyze the most recent consumer turns in the conversation.

How automatic translation works

Incoming messages are translated automatically into the agent's preferred language. The agent then composes a response and asks the system to translate it into the consumer's preferred language.

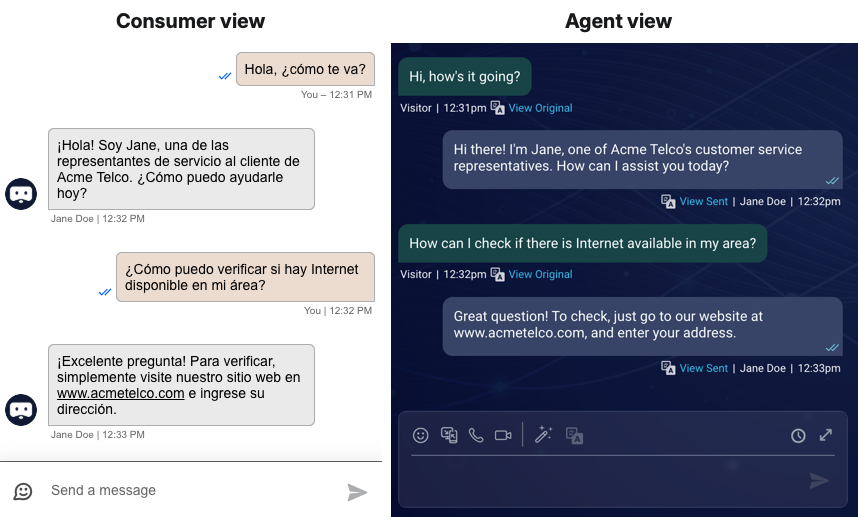

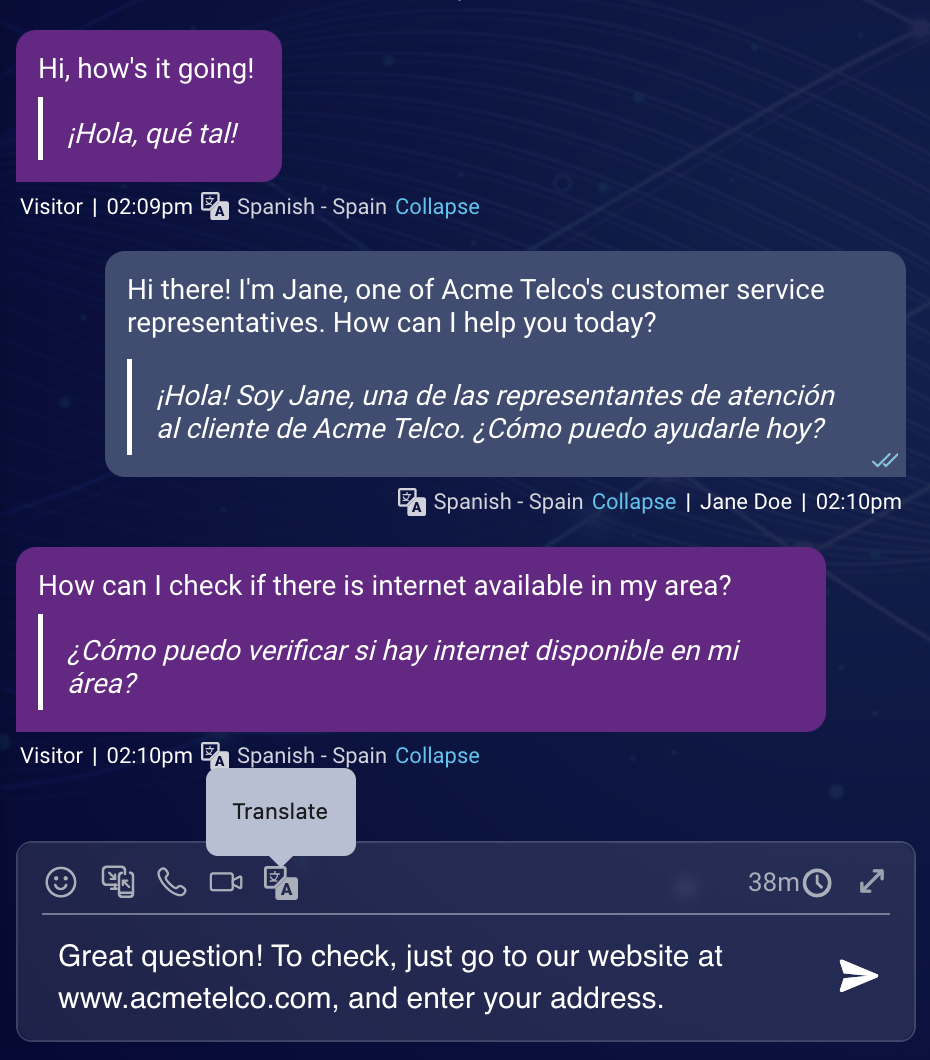

Below is a conversation between a Spanish-speaking consumer and an English-speaking agent. Note how the agent’s default view of the conversation is entirely in English, which aids their comprehension and makes response times faster.

If desired, the agent can view the actual messages received from and sent to the consumer:

Enablement

To have the feature turned on for your account, submit your request via this form.

Learn more

Copilot Rewrite - Skills map to a single semantic dictionary

Using semantic dictionaries to ensure consistent brand messaging and adherence to your specific terminology, word structures, and capitalization preferences? To enable Copilot Rewrite to use those dictionaries, you must assign them to skills, so the dictionaries can be used during conversations on those skills.

As of this release, a skill can be assigned to only one dictionary. This ensures the system always picks the correct dictionary for a rewrite, avoiding unpredictable results.

Conversation Builder - Release 4.0 features and enhancements

Conversation Builder - Knowledge-based bots can contextualize queries for better answers

Got a Conversation Builder messaging bot or voice bot that automates answers to consumers? There’s great news on this front.

By default, when a user’s query is used to find an answer (a matched article) in a knowledge base, that query is just a single utterance (the most recent one) in the conversation. Often, a single utterance doesn’t provide enough context to retrieve a high-quality answer.

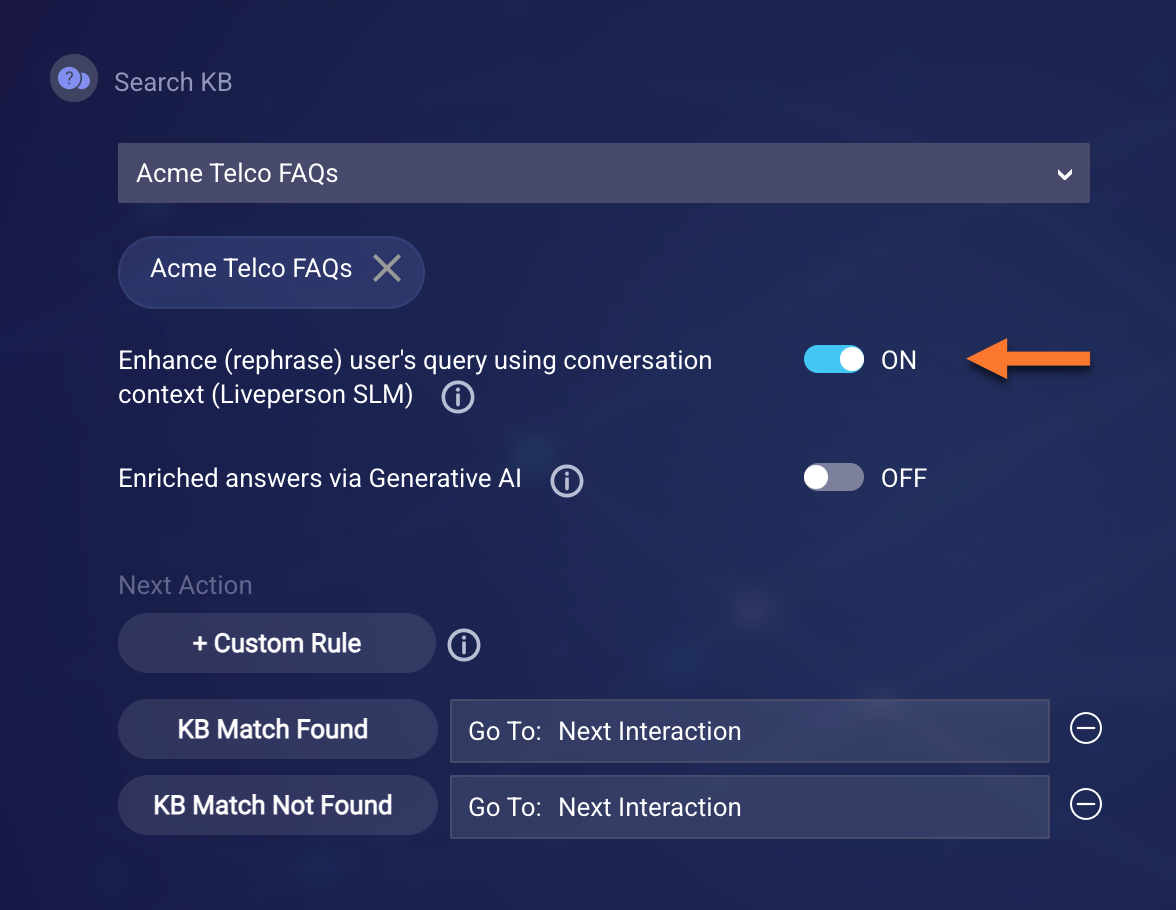

To solve this issue, you can now configure the relevant knowledge interaction so that KnowledgeAI™’s query contextualization is performed before running the knowledge base search.

Query contextualization rephrases the consumer’s query using some “turns” in the conversation context. The enhanced query is then used to find matching articles in the knowledge base(s). The result? Much better answers are returned.

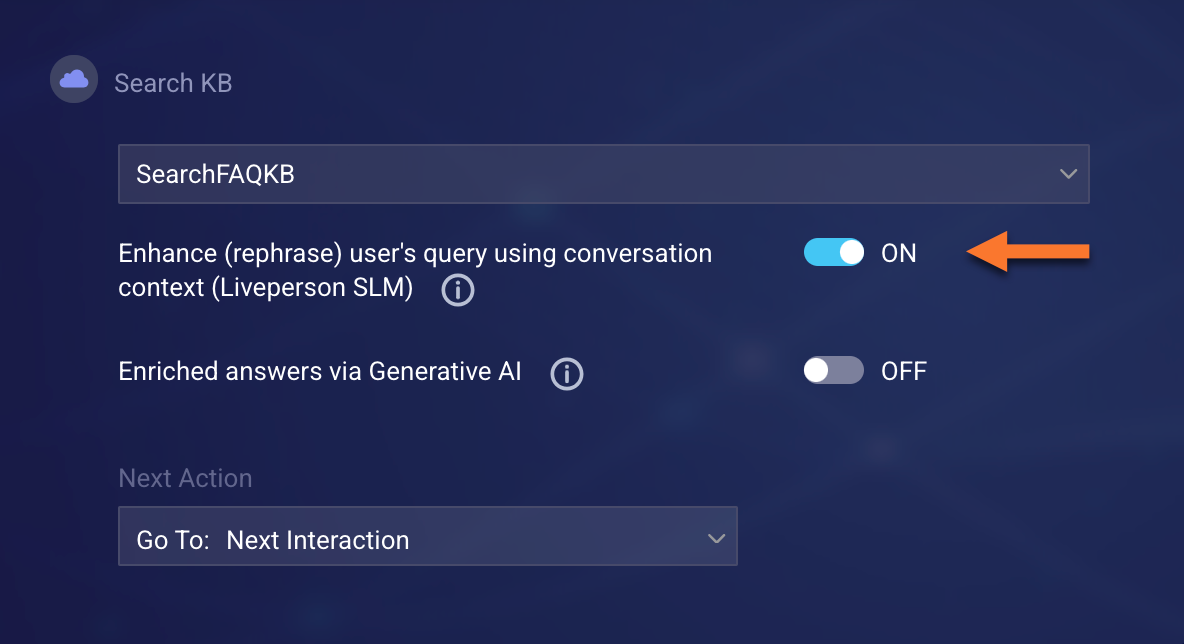
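The order of operations described above can be pictured with a toy sketch. Everything in it is an assumption made for illustration: the `answerWithContext` helper, the `maxTurns` parameter, and the two stub functions are hypothetical and do not represent KnowledgeAI's model, search, or API. The sketch only shows that the rephrasing step runs on recent turns before the search step.

```javascript
// Toy pipeline: the rephrasing and search steps are injected as functions,
// so nothing here pretends to be LivePerson's model or knowledge base search.
function answerWithContext(turns, rephrase, search, maxTurns = 3) {
  const recentTurns = turns.slice(-maxTurns);          // "some turns" of context (count is assumed)
  const query = recentTurns[recentTurns.length - 1];   // the most recent utterance
  const enhancedQuery = rephrase(recentTurns);         // contextualization happens first
  return { query, enhancedQuery, articles: search(enhancedQuery) };
}

// Hypothetical stubs: a naive "rephraser" that concatenates the turns, and a
// keyword "search" over a single made-up article title.
const stubRephrase = (recentTurns) => recentTurns.join(" ");
const stubSearch = (q) =>
  q.toLowerCase().includes("refund") ? ["Refund policy"] : [];

const turns = ["I want a refund for my order", "How long does it take?"];

const withoutContext = stubSearch(turns[turns.length - 1]);
const withContext = answerWithContext(turns, stubRephrase, stubSearch);

console.log(withoutContext.length, withContext.articles.length);
```

In this toy setup, the last utterance alone carries no searchable topic, while the contextualized query does.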

You can turn on query contextualization in a KnowledgeAI interaction:

You can also turn on query contextualization in an Integration interaction whenever the selected integration is of type KnowledgeAI:

FAQs

Can both messaging bots and voice bots use KnowledgeAI’s query contextualization?

Absolutely. Messaging bots typically use the KnowledgeAI interaction (first image above), while Voice bots typically use the Integration interaction (second image above). Both interactions have the new setting to turn on query contextualization.

Do I need to be using LLMs and Generative AI to take advantage of this feature?

No. Query contextualization can be used even when you aren’t using LLMs and Generative AI.

Query contextualization is an optional process that’s run on the user’s query before the knowledge base search. It doesn’t use an LLM. It uses a state-of-the-art, LivePerson small language model or SLM (a decoder) that’s fine-tuned for query contextualization tasks.

Can I customize the prompt that’s sent to the SLM?

No, this isn’t possible. The prompt is tailored to suit the model.

Conversation Builder - Customize intent disambiguation in Routing AI agents

Previously, Conversation Builder limited a Routing AI agent to a maximum of three intent disambiguation attempts, to optimize the consumer experience and prevent consumers from getting stuck in a disambiguation loop. This number wasn’t configurable.

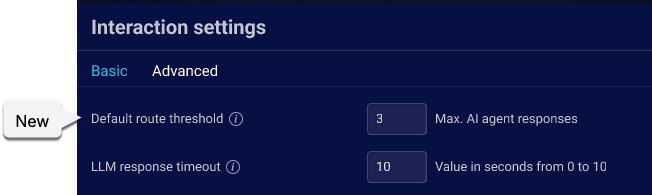

In this release, we add a new advanced setting named Default route threshold to the Guided Routing interaction:

Use this new setting to customize the maximum number of disambiguation attempts that can be made by the Routing AI agent, after which it sends your consumers to the default route. The default value is 3.
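As a rough mental model, the setting acts like a cap on a counter of disambiguation attempts. The sketch below is illustrative only: the `nextAction` helper and its return values are hypothetical, and the exact boundary behavior (the default route applies once the number of attempts made reaches the threshold) is an assumption rather than documented behavior.

```javascript
// Illustrative model only; not the Routing AI agent's actual logic.
const DEFAULT_ROUTE_THRESHOLD = 3; // the documented default value

// Decides what happens after a disambiguation attempt that did not
// resolve the consumer's intent.
function nextAction(attemptsMade, threshold = DEFAULT_ROUTE_THRESHOLD) {
  return attemptsMade >= threshold ? "default-route" : "disambiguate-again";
}

console.log(nextAction(1)); // fewer attempts than the threshold
console.log(nextAction(3)); // threshold reached
```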

Conversation Builder - New scripting function to get a LivePerson service domain

Use the new getLPDomain function in a bot to dynamically retrieve the base URI for a given service.

Internally, this function makes a call to LivePerson’s Domain API. Within a bot, you can’t call that API directly, so we now offer this scripting function. Take advantage of it to avoid hard coding domains. And remember these best practices:

- Always check the output for null values and empty strings. While the call is retried, it’s still an external call that might fail.

- Never hard code a domain in your code; always retrieve it dynamically because the domains can change.
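The best practices above can be sketched as a small wrapper. This is a minimal sketch under stated assumptions: it assumes the function is called as `botContext.getLPDomain(serviceName)` and returns a string, which this note does not confirm. The `botContext` object below is a hypothetical stand-in so the snippet runs outside a bot, and the service name and domain value are made up.

```javascript
// Hypothetical stand-in for the botContext object available in a bot's
// scripts. The lookup table and its values are invented for illustration.
const botContext = {
  getLPDomain(serviceName) {
    const fakeDomains = { exampleService: "example-service.invalid" };
    return fakeDomains[serviceName] ?? null;
  },
};

// Returns the base URI for a service, or null when the lookup yields
// nothing usable (null, undefined, or an empty or blank string).
function resolveServiceDomain(context, serviceName) {
  const domain = context.getLPDomain(serviceName);
  if (typeof domain !== "string" || domain.trim() === "") {
    return null;
  }
  return domain;
}

const domain = resolveServiceDomain(botContext, "exampleService");
if (domain === null) {
  console.log("Domain lookup failed; take an error-handling path.");
} else {
  console.log("Resolved base URI: " + domain);
}
```

Funneling every lookup through one helper keeps the null and empty-string check in a single place instead of repeating it at each call site.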

Conversation Builder - New scripting functions for storing entity-level context data in CCS

We are excited to announce the availability of new scripting functions in Conversation Builder to set and get contextual data (in the Conversation Context Service or CCS) based on an entity's unique identifier.

These new functions provide a flexible and customizable way to store and retrieve data associated with any uniquely identifiable unit that is relevant to your brand (an "entity").

New functions

- setContextDataForEntity(namespace, entityId, name, value)

- setContextDataForEntity(namespace, entityId, properties)

- getContextDataForEntity(namespace, entityId, name)

- getContextDataForEntity(namespace, entityId)

- deleteContextDataForEntity(namespace, entityId, name)

Key benefits

- Use your own IDs: You can now use your brand's internal unique identifiers (the entityId)—such as your brand’s consumer ID, product SKU, order or shipment ID, or store or location ID—to segregate and manage data.

- Flexible context: Unlike other functions that manage data globally, per user (based on a Conversational Cloud ID), or per conversation, these new functions allow for highly flexible, entity-level data management.

- Improved performance and manageability: These entity-level functions offer a superior solution for storing consumer context, resolving the performance and manageability issues associated with previous approaches like relying on setGlobalContextData to aggregate consumer-specific information.
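To show how the five signatures fit together, here is a minimal sketch keyed by an order ID. It rests on assumptions: that the functions are called on `botContext`, and that they behave like a simple key-value store. The in-memory `createFakeBotContext` stand-in, the `orders` namespace, the order ID, and all values are hypothetical. The real functions store data in CCS, and their return values and error behavior are not described in this note.

```javascript
// Hypothetical in-memory stand-in for botContext so the sketch runs outside
// Conversation Builder. Only the function names and parameter lists come
// from the release note; the storage behavior here is assumed.
function createFakeBotContext() {
  const store = new Map(); // "namespace/entityId" -> object of properties
  const keyOf = (namespace, entityId) => namespace + "/" + entityId;
  return {
    // Accepts either (name, value) or a single properties object.
    setContextDataForEntity(namespace, entityId, nameOrProperties, value) {
      const key = keyOf(namespace, entityId);
      const current = store.get(key) || {};
      if (typeof nameOrProperties === "string") {
        current[nameOrProperties] = value;
      } else {
        Object.assign(current, nameOrProperties);
      }
      store.set(key, current);
    },
    // Returns one property when a name is given, otherwise a copy of all of them.
    getContextDataForEntity(namespace, entityId, name) {
      const current = store.get(keyOf(namespace, entityId)) || {};
      return name === undefined ? { ...current } : current[name];
    },
    deleteContextDataForEntity(namespace, entityId, name) {
      const current = store.get(keyOf(namespace, entityId));
      if (current) delete current[name];
    },
  };
}

const botContext = createFakeBotContext();
const namespace = "orders";   // hypothetical namespace
const orderId = "ORD-1001";   // hypothetical entity ID (your brand's own ID)

// Set one property, then several at once.
botContext.setContextDataForEntity(namespace, orderId, "status", "shipped");
botContext.setContextDataForEntity(namespace, orderId, { carrier: "ExampleCarrier", items: 2 });

// Read one property, then everything stored for the entity.
const status = botContext.getContextDataForEntity(namespace, orderId, "status");
const allData = botContext.getContextDataForEntity(namespace, orderId);

// Remove a single property.
botContext.deleteContextDataForEntity(namespace, orderId, "carrier");
const carrierAfterDelete = botContext.getContextDataForEntity(namespace, orderId, "carrier");

console.log(status, allData, carrierAfterDelete);
```

Because the data is keyed by the brand's own identifier rather than by user or conversation, any conversation that knows the order ID can read it back.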