Not ready to make use of Generative AI and LLMs? No problem. You don't need to incorporate these technologies into your solution. The choice is yours: Use Conversation Assist with or without Generative AI.

If you’re using Conversation Assist to offer answer recommendations to your agents, you can offer ones that are enriched by KnowledgeAI's LLM-powered answer enrichment service. We call this offering Copilot Assist. The resulting answers, formulated via Generative AI, are:

- Grounded in knowledge base content

- Contextually aware

- Natural-sounding

Language support

Enriched answers are supported for:

- Consumer queries in English where the knowledge base’s language (content) is English

- Consumer queries in Spanish where the knowledge base’s language (content) is Spanish

If the language of your knowledge base is one of the 50+ other languages available, support is experimental. Don’t hesitate to get started using them in your demo solutions to explore the capabilities of Generative AI. Learn alongside us. And share your feedback! As always, proceed with care: Test thoroughly before rolling out to Production.

Get started

Learn about KnowledgeAI's answer enrichment service.

At LivePerson, we’re thrilled that advancements in Natural Language Processing (NLP) and Large Language Models (LLMs) have opened up a world of possibilities for Conversational AI solutions. The impact is truly transformative.

That's why we're delighted to say that our KnowledgeAI application offers an answer enrichment service powered by one of OpenAI's best and latest LLMs. If you’re using KnowledgeAI to recommend answers to agents via Conversation Assist, or to automate intelligent answers from LivePerson Conversation Builder bots to consumers, you can take advantage of this enrichment service.

How does it work? At a high level, the consumer’s query is passed to KnowledgeAI, which uses its advanced search methods to retrieve the most relevant answers from your knowledge base. Those answers, along with some conversation context, are then passed to the LLM service for enrichment, to craft a final answer. That’s Generative AI at work. The end result is an answer that’s accurate, contextually relevant, and natural. In short, enriched answers.

To see what we mean, let’s check out a few examples in an automated conversation with a bot.

Here’s a regular answer that’s helpful…but stiff:

But this enriched answer is warm:

This regular answer is helpful:

But this enriched answer is even more helpful:

This regular answer doesn’t handle multiple queries within the same question:

But this enriched answer does so elegantly:

Overall, the results are smarter, warmer, and better. And the experience, well, human-like.

Use KnowledgeAI’s answer enrichment service to safely and productively take advantage of the unparalleled capabilities of Generative AI within our trusted Conversational AI platform. Reap better business outcomes with our trustworthy Generative AI.

Answer enrichment flow

Regardless of whether you’re using enriched answers in Conversation Assist or in a Conversation Builder bot, the same general flow is used:

- The consumer’s query is passed to KnowledgeAI, which uses its advanced search methods to retrieve matched articles from the knowledge base(s).

- Three pieces of data are passed to the enrichment service:

    - A prompt

    - The matched articles

    - Optionally, the most recent consumer message or some number of turns from the current conversation (to provide context). Inclusion of this particular info depends on the Advanced settings in the prompt that's used.

- The info is used by the underlying LLM service to generate a single, final enriched answer. And the answer is returned to KnowledgeAI.
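To make the flow above more concrete, here is a minimal Python sketch of how the three pieces of data might be assembled. Every name in it (`EnrichmentRequest`, `build_enrichment_request`, `context_turns`) is hypothetical and isn't part of the KnowledgeAI API. The `context_turns` parameter is just an assumed stand-in for the prompt's Advanced settings.

```python
from dataclasses import dataclass, field


@dataclass
class EnrichmentRequest:
    """Hypothetical container for the data passed to the enrichment service."""
    prompt: str
    articles: list
    context: list = field(default_factory=list)


def build_enrichment_request(prompt, articles, conversation, context_turns=0):
    """Assemble the request; context is included only when context_turns > 0.

    context_turns is an assumed stand-in for the prompt's Advanced settings.
    """
    # Guard against conversation[-0:], which would return the whole list.
    context = conversation[-context_turns:] if context_turns > 0 else []
    return EnrichmentRequest(prompt=prompt, articles=list(articles), context=list(context))


conversation = ["Hi", "How can I help?", "How do I reset my password?"]
articles = [{"title": "Reset your password", "detail": "Go to Settings > Security."}]

request = build_enrichment_request(
    "Answer using the articles.", articles, conversation, context_turns=1
)
print(request.context)
```

With `context_turns=1`, only the most recent message is included as context. With the default of `0`, no conversation context is sent at all.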

Regarding the matched articles

The info in the matched articles that is sent to the LLM includes each article’s title and detail fields. This ensures that as much info as possible is available to the LLM so that it can generate an enriched answer of good quality.

Importantly, if an article has no detail, then its title and summary are sent instead.
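The field selection described above can be sketched as follows. This is only an illustration: the function name `article_payload` and the dictionary keys (`title`, `detail`, `summary`) are assumptions, not the service's actual data model.

```python
def article_payload(article):
    """Return the fields sent to the LLM for one matched article.

    The keys "title", "detail", and "summary" are assumed for illustration.
    """
    detail = (article.get("detail") or "").strip()
    if detail:
        # Preferred case: title plus detail.
        return {"title": article["title"], "detail": detail}
    # No detail available: fall back to title plus summary.
    return {"title": article["title"], "summary": article.get("summary", "")}


with_detail = {"title": "Reset password", "summary": "Short version", "detail": "Full steps"}
without_detail = {"title": "Store hours", "summary": "Open 9 to 5", "detail": ""}

print(article_payload(with_detail))
print(article_payload(without_detail))
```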

Enrichment prompts

When you're using Generative AI to enrich answers in Conversation Assist or Conversation Builder bots, the Enrichment prompt is required.

The Enrichment prompt is the prompt to send to the LLM service when the consumer’s query is matched to articles in the knowledge base. It instructs the LLM service on how to use the matched articles to generate an enriched answer.

No Article Match prompts

When you're using Generative AI to enrich answers in Conversation Assist or Conversation Builder bots, the No Article Match prompt is optional.

The No Article Match prompt is the prompt to send to the LLM service when the consumer’s query isn’t matched to any articles in the knowledge base. It instructs the LLM service on how to generate a response (using just the conversation context and the prompt).

If you don’t select a No Article Match prompt, then if a matched article isn’t found, no call is made to the LLM service for a response.
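Put together, the two prompt types lead to a simple decision. The Python sketch below is a hypothetical illustration of that decision (the function `choose_prompt` and its parameters aren't a real API): it returns the prompt to send, or `None` when no call to the LLM service should be made.

```python
def choose_prompt(matched_articles, enrichment_prompt, no_match_prompt=None):
    """Return the prompt to send to the LLM service, or None to skip the call."""
    if matched_articles:
        # Articles matched: the (required) Enrichment prompt is used.
        return enrichment_prompt
    # No match: use the optional No Article Match prompt if one was selected.
    # When it is None, no call is made to the LLM service.
    return no_match_prompt


print(choose_prompt([{"title": "Reset password"}], "ENRICH", "NO_MATCH"))
print(choose_prompt([], "ENRICH", "NO_MATCH"))
print(choose_prompt([], "ENRICH"))
```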

Using a No Article Match prompt can offer a more fluent and flexible response that helps the user refine their query:

- Consumer query: What’s the weather like?

- Response: Hi there! I'm sorry, I'm not able to answer that question. I'm an AI assistant for this brand, so I'm here to help you with any questions you may have about our products and services. Is there something specific I can help you with today?

Using a No Article Match prompt also means that small talk is supported:

A No Article Match prompt can also yield answers that are out-of-bounds. The model might hallucinate and provide a non-factual response in its effort to generate an answer using only the memory of the data it was trained on. Use caution when using it, and test thoroughly.

Response length

The prompt that's provided to the LLM service can direct it to respond in a certain number of words. For example, a prompt that's used in a messaging bot might direct the service to respond using at least 10 words and no more than 300 words.

Be aware that the length of the matched article(s) influences the length of the answer (within the bounds stated). Generally speaking, the longer the relevant matched article, the longer the response.

Confidence thresholds

KnowledgeAI integrations within Conversation Builder bots and the settings within Conversation Assist both allow you to specify a “threshold” that matched articles must meet to be returned as results. We recommend a threshold of “GOOD” or better for best performance.

If you’re using enriched answers, use caution when downgrading the threshold to FAIR PLUS. If a low-scoring article is returned as a match, the LLM service can sometimes try to use it in the response, which results in a low-quality answer.

As an example, below is a scenario where a strange consumer query was posed to a financial brand’s bot. The query yielded a FAIR PLUS match to an article on troubleshooting issues when downloading the brand’s banking app. So the enriched answer was as follows:

- Consumer query: Can I book a flight to Hawaii?

- Enriched answer: I'm sorry, I can't find any information about booking a flight to Hawaii. However, our Knowledge Articles do provide information about our banking app. If you're having trouble downloading our app, check that…

In the above example, the service rightly recognized it couldn’t speak to the consumer’s query. However, it also wrongly included irrelevant info in the response because that info was in the matched article.
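To see why the threshold matters, consider this small Python sketch of threshold filtering. It's an illustration under assumptions: the level names and their ordering in `LEVELS` are assumed (this section names only GOOD and FAIR PLUS), and `filter_matches` is a hypothetical helper, not a product function.

```python
# Assumed ordering, lowest to highest. Only GOOD and FAIR PLUS are named in the text.
LEVELS = ["POOR", "FAIR", "FAIR_PLUS", "GOOD", "VERY_GOOD"]


def meets_threshold(level, threshold="GOOD"):
    """True when the match's confidence level is at or above the threshold."""
    return LEVELS.index(level) >= LEVELS.index(threshold)


def filter_matches(matches, threshold="GOOD"):
    """Keep only the matches that the threshold allows to be returned."""
    return [m for m in matches if meets_threshold(m["level"], threshold)]


# The flight query above produced only a FAIR PLUS match.
matches = [{"title": "Troubleshoot app download issues", "level": "FAIR_PLUS"}]

print(filter_matches(matches, threshold="GOOD"))
print(filter_matches(matches, threshold="FAIR_PLUS"))
```

At a threshold of GOOD, the weak match is filtered out, so it never reaches the LLM service. At FAIR PLUS, it passes through and can end up in the enriched answer.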

Hallucinations

Hallucinations in LLM-generated responses happen from time to time, so a Generative AI solution that’s trustworthy requires smart and efficient ways to handle them. The degree of risk here depends on the style of the prompt that’s used.

Conversational Cloud's LLM Gateway has a Hallucination Detection post-processing service that detects and handles hallucinations with respect to URLs, phone numbers, and email addresses.

Tuning outcomes

When it comes to tuning outcomes, you can do a few things:

- Try changing the prompt.

- Follow our best practices for raising the quality of answers.

Reporting

Use the Generative AI Dashboard in Conversational Cloud's Report Center to make data-driven decisions that improve the effectiveness of your Generative AI solution.

The dashboard helps you answer these important questions:

- How is the performance of Generative AI in my solution?

- How much is Generative AI helping my agents and bots?

The dashboard draws conversational data from all channels across Voice and Messaging, producing actionable insights that can drive business growth and improve consumer engagement.

Access Report Center by clicking Optimize > Manage on the left-hand navigation bar.

Security considerations

When you turn on enriched answers, your data remains safe and secure, and we use it in accordance with the guidelines in the legal agreement that you’ve accepted and signed. Note that:

- No data is stored by the third-party vendor.

- All data is encrypted to and from the third-party LLM service.

- Payment Card Industry (PCI) info is always masked before being sent.

- PII (Personally Identifiable Information) can also be masked upon your request. Be aware that doing so can cause some increased latency. It can also inhibit an optimal consumer experience because the omitted context might result in less relevant, unpredictable, or junk responses from the LLM service. To learn more about turning on PII masking, contact your LivePerson representative.

Limitations

Currently, there are no strong guardrails in place for malicious or abusive use of the system. For example, a leading question like, “Tell me about your 20% rebate for veterans,” might produce a hallucination: The response might incorrectly describe such a rebate when, in fact, there isn’t one.

Malicious or abusive behavior—and hallucinations as outcomes—can introduce a liability for your brand. For this reason, training your agents to carefully review enriched answers is an important matter. Also, as you test enriched answers, please send us your feedback about potential vulnerabilities. We will use that feedback to refine our models, tuning them for that delicate balance between useful, generated responses and necessary protections.

Activate the Generative AI features

One of your admin users must enable our Generative AI features, as this requires access to the Management Console.

1 - Log in to Conversational Cloud.

2 - Open the menu on the left side of the page, and select Manage > Management Console.

3 - Search for "Generative AI Enablement."

4 - Click Sign to activate.

5 - Enter your brand name and industry, review the terms and conditions, and click the checkbox to accept them. Then click Agree.

During queries to the Large Language Model (LLM) service, your brand name and industry are included in the prompt that gets sent to the service. This helps the responses stay in bounds, i.e., specific to your brand, with fewer hallucinations.

At any point thereafter, you can edit your brand name and industry in the Management Console, within Generative AI Enablement > Account Details.

Turn on enriched answers

You turn on enriched answers within the add-on of a rule. This means you can turn it on for some scenarios but not others.

Default prompt

To get you up and running quickly, Conversation Assist makes use of a default Enrichment prompt. An Enrichment prompt is required, and we don't want you to have to spend time on selecting one when you're just getting started and exploring.

Here's the default prompt that Conversation Assist passes to KnowledgeAI when requesting enriched answers:

In your knowledge base-related rules, use the default prompt for a short time during exploration. But be aware that LivePerson can change it without notice, altering the behavior of your solution accordingly. To avoid this, at your earliest convenience, duplicate the prompt and use the copy, or select another prompt from the Prompt Library.

You can learn more about the default prompt by reviewing its description in the Prompt Library.

Select a prompt

You select the prompt to use within the add-on of a rule. This means you can use one prompt in one scenario but a different prompt in another.

When you need to create or edit a prompt, make sure to open the Prompt Library via the add-on of a rule within Conversation Assist. Don’t open the Prompt Library via another application like Conversation Builder or KnowledgeAI. If you do, your new or updated prompt won’t be available for use back in Conversation Assist. This is because Conversation Assist isn’t yet fully updated to reflect recent enhancements to the Prompt Library. But stay tuned; soon this constraint won’t exist!

Agent experience

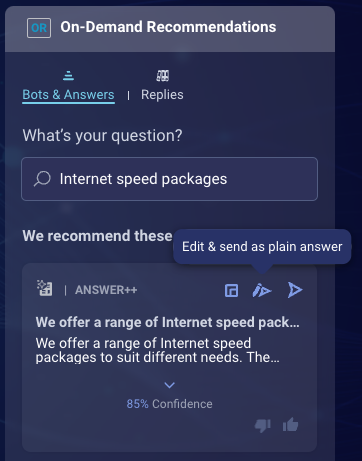

Hallucination handling

Hallucinations in LLM-generated responses happen from time to time, so a Generative AI solution that’s trustworthy requires smart and efficient ways to handle them.

When returning answers to Conversation Assist, by default, KnowledgeAI takes advantage of our LLM Gateway’s ability to mark hallucinated URLs, phone numbers, and emails. This is done so that the client application—in this case, Conversation Assist—can understand where the hallucination is located in the response and handle it as required.

For its part, when Conversation Assist receives a recommended answer that contains a marked hallucination (URL, phone number, or email address), it automatically masks the hallucination and replaces it with a placeholder for the right info. These placeholders are visually highlighted for agents, so they can quickly see where to take action and fill in the right info.
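The masking step can be pictured with a short Python sketch. Note the assumptions: the marking format used here (a list of `(start, end, kind)` tuples) and the placeholder text are invented for illustration, since the actual format used by the LLM Gateway and Conversation Assist isn't described here.

```python
# Hypothetical placeholder text for each kind of marked hallucination.
PLACEHOLDERS = {"url": "[URL]", "phone": "[PHONE NUMBER]", "email": "[EMAIL ADDRESS]"}


def mask_hallucinations(text, spans):
    """Replace each marked span with a placeholder.

    spans: iterable of (start, end, kind) tuples, an assumed marking format.
    """
    # Work right to left so earlier offsets stay valid after each replacement.
    for start, end, kind in sorted(spans, reverse=True):
        text = text[:start] + PLACEHOLDERS[kind] + text[end:]
    return text


answer = "Visit https://example.com/reset or call 555-0100."
url = "https://example.com/reset"
phone = "555-0100"
spans = [
    (answer.index(url), answer.index(url) + len(url), "url"),
    (answer.index(phone), answer.index(phone) + len(phone), "phone"),
]

print(mask_hallucinations(answer, spans))
```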

Check out our animated example below: A hallucinated URL has been detected and masked. The agent sees the placeholder, enters the right URL, and sends the fixed response to the consumer.

https://player.vimeo.com/video/857269940

To make quick work of filling in placeholders, make your contact info available as predefined content. This exposes the content on the Replies tab in the On-Demand Recommendations widget. The agent can copy the info with a single click and paste it where needed. We’ve illustrated this in our animation above.

Train your agents

Train your agents on the difference between regular answer recommendations and enriched answer recommendations, the need to review the latter with care, and the reasons why. Similar important guidance is offered in the UI:

Your agents are able to edit enriched answers before sending them to consumers.

Limitations

Answer recommendations that are enriched via Generative AI can be plain answers, or they can be rich answers that contain an image and links.

Rich answers that are also enriched via Generative AI are supported. However, be aware that the image and links shown always come from the highest-scoring article, so sometimes they might not align perfectly with the answer generated by the LLM.

Rich content only appears in an enriched answer when the generated answer is based on an article that has specified content links: content URL, audio URL, image URL, or video URL.

FAQs

I'm offering bot recommendations to my agents, and I want those bots to send answers that are enriched via Generative AI. How do I turn this on?

This configuration is done at the interaction level in the bot, so you do this in LivePerson Conversation Builder, not in Conversation Assist.

Is there any change to how answer recommendations are presented to agents?

Yes. For a consistent agent experience, in the Agent Workspace you can always find enriched answers offered first, followed by answers that aren’t enriched, and bots listed last. Within each grouping, the recommendations are then ordered by their confidence scores.

The confidence score shown for the enriched answer recommendation is the confidence score of the highest-scoring article that was retrieved from the knowledge base.
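The ordering rules above amount to a two-level sort, which this Python sketch illustrates. The record shape and the names (`GROUP_ORDER`, `order_recommendations`, `enriched_score`) are hypothetical, as are the numeric scores.

```python
# Display order of the groupings: enriched answers, then regular answers, then bots.
GROUP_ORDER = {"enriched_answer": 0, "answer": 1, "bot": 2}


def order_recommendations(recs):
    """Sort by grouping first, then by confidence score, highest first."""
    return sorted(recs, key=lambda r: (GROUP_ORDER[r["type"]], -r["score"]))


def enriched_score(article_scores):
    """An enriched answer shows the score of its highest-scoring article."""
    return max(article_scores)


recs = [
    {"type": "bot", "name": "Billing bot", "score": 0.95},
    {"type": "answer", "name": "Refund policy", "score": 0.70},
    {"type": "enriched_answer", "name": "Enriched", "score": enriched_score([0.62, 0.81])},
    {"type": "answer", "name": "Return window", "score": 0.88},
]

for rec in order_recommendations(recs):
    print(rec["type"], rec["name"], rec["score"])
```

Notice that the bot is listed last even though it has the highest score, because the grouping takes priority over the confidence score.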

Do hallucinations affect the confidence scores of recommended answers?

No. The answer, i.e., the article, is matched to the consumer’s query and given a confidence score for that match before the answer is enriched by the LLM service.

Enrichment of the answer via Generative AI doesn’t affect the assigned confidence score for the match. Similarly, hallucinations detected in the enriched answer don’t affect the score either.

Ready to launch the next capability? Click here to proceed.